Second Order Subdomain Takeover Scanner Tool scans web applications for second-order subdomain takeover by crawling the application and collecting URLs (and other data) that match specific rules or respond in a specific way.

Using Second Order Subdomain Takeover Scanner Tool

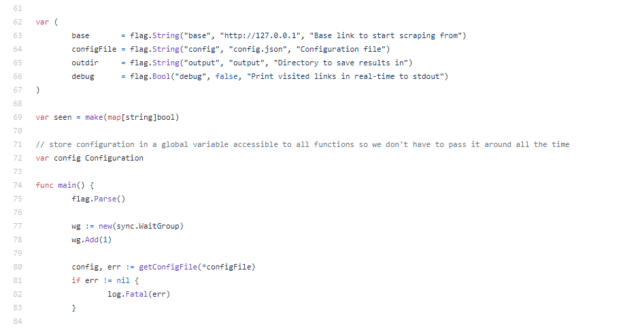

Command line options:

    -base string
          Base link to start scraping from (default "http://127.0.0.1")
    -config string
          Configuration file (default "config.json")
    -debug
          Print visited links in real-time to stdout
    -output string
          Directory to save results in (default "output")
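The option list above has the shape printed by Go's standard `flag` package, so the four documented flags can be sketched as follows. This is a minimal illustration rather than the tool's actual source: the `Options` struct and `parseOptions` function are hypothetical names, and only the flag names, defaults, and help strings are taken from the list.

```go
package main

import (
	"flag"
	"fmt"
	"os"
)

// Options holds the four documented flags.
// The struct itself is a hypothetical container, not part of the tool.
type Options struct {
	Base   string
	Config string
	Debug  bool
	Output string
}

// parseOptions parses args (without the program name) using the
// documented flag names and defaults.
func parseOptions(args []string) (Options, error) {
	var o Options
	fs := flag.NewFlagSet("second-order", flag.ContinueOnError)
	fs.StringVar(&o.Base, "base", "http://127.0.0.1", "Base link to start scraping from")
	fs.StringVar(&o.Config, "config", "config.json", "Configuration file")
	fs.BoolVar(&o.Debug, "debug", false, "Print visited links in real-time to stdout")
	fs.StringVar(&o.Output, "output", "output", "Directory to save results in")
	err := fs.Parse(args)
	return o, err
}

func main() {
	opts, err := parseOptions(os.Args[1:])
	if err != nil {
		// flag has already printed the error and usage to stderr.
		os.Exit(2)
	}
	fmt.Printf("%+v\n", opts)
}
```

Any flag that is not passed keeps its documented default, so running with only `-base` still reads `config.json` and writes to `output`.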

Example:

    go run second-order.go -base https://example.com -config config.json -output example.com -concurrency 10

Config File for Second Order Subdomain Takeover Scanner Tool

An example configuration file, config.json, is included with the tool. It supports the following keys:

- Headers: A map of headers that will be sent with every request.

- Depth: Crawling depth.

- LogCrawledURLs: If this is set to true, Second Order will log the URL of every crawled page.

- LogQueries: A map of tag-attribute queries that will be searched for in crawled pages. For example, "a": "href" means log every href attribute of every a tag.

- LogURLRegex: A list of regular expressions that will be matched against the URLs that are extracted using the queries in LogQueries; if left empty, all URLs will be logged.

- LogNon200Queries: A map of tag-attribute queries that will be searched for in crawled pages, and logged only if they don’t return a 200 status code.

- ExcludedURLRegex: A list of regular expressions whose matching URLs will not be accessed by the tool.

- ExcludedStatusCodes: A list of status codes; if any page responds with one of these, it will be excluded from the results of LogNon200Queries; if left empty, all non-200 pages’ URLs will be logged.

- LogInlineJS: If this is set to true, Second Order will log the contents of every script tag that doesn’t have a src attribute.
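To make the keys above concrete, here is a rough Go sketch that decodes a configuration of this shape and applies the three filtering rules as described. Everything beyond the key names is an assumption: the field types are inferred from the descriptions, the sample values are invented for illustration, and the helper functions (`loadConfig`, `matchesAny`, `shouldVisit`, `shouldLogURL`, `shouldLogNon200`) are hypothetical, not the tool's own code.

```go
package main

import (
	"encoding/json"
	"fmt"
	"regexp"
)

// Config mirrors the documented keys; the Go types are assumed.
type Config struct {
	Headers             map[string]string
	Depth               int
	LogCrawledURLs      bool
	LogQueries          map[string]string
	LogURLRegex         []string
	LogNon200Queries    map[string]string
	ExcludedURLRegex    []string
	ExcludedStatusCodes []int
	LogInlineJS         bool
}

// sample is an invented configuration, not the shipped config.json.
const sample = `{
  "Headers": {"User-Agent": "second-order-example"},
  "Depth": 1,
  "LogCrawledURLs": false,
  "LogQueries": {"a": "href"},
  "LogURLRegex": ["s3\\.amazonaws\\.com"],
  "LogNon200Queries": {"script": "src"},
  "ExcludedURLRegex": ["\\.(png|jpg)$"],
  "ExcludedStatusCodes": [404],
  "LogInlineJS": false
}`

func loadConfig(data []byte) (Config, error) {
	var c Config
	err := json.Unmarshal(data, &c)
	return c, err
}

// matchesAny reports whether url matches at least one pattern.
func matchesAny(patterns []string, url string) (bool, error) {
	for _, p := range patterns {
		ok, err := regexp.MatchString(p, url)
		if err != nil {
			return false, err
		}
		if ok {
			return true, nil
		}
	}
	return false, nil
}

// shouldVisit: URLs matching ExcludedURLRegex are never accessed.
func shouldVisit(c Config, url string) (bool, error) {
	excluded, err := matchesAny(c.ExcludedURLRegex, url)
	return !excluded, err
}

// shouldLogURL: an empty LogURLRegex means every extracted URL is logged.
func shouldLogURL(c Config, url string) (bool, error) {
	if len(c.LogURLRegex) == 0 {
		return true, nil
	}
	return matchesAny(c.LogURLRegex, url)
}

// shouldLogNon200: log non-200 responses unless the status is excluded.
func shouldLogNon200(c Config, status int) bool {
	if status == 200 {
		return false
	}
	for _, s := range c.ExcludedStatusCodes {
		if s == status {
			return false
		}
	}
	return true
}

func main() {
	cfg, err := loadConfig([]byte(sample))
	if err != nil {
		panic(err)
	}
	logIt, _ := shouldLogURL(cfg, "https://bucket.s3.amazonaws.com/app.js")
	fmt.Println("log URL:", logIt)
	fmt.Println("log 500 response:", shouldLogNon200(cfg, 500))
}
```

Keeping each rule in its own small function makes the precedence easy to read: exclusion by URL is checked before a request is made, while the status-code filter only applies to results gathered through LogNon200Queries.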
